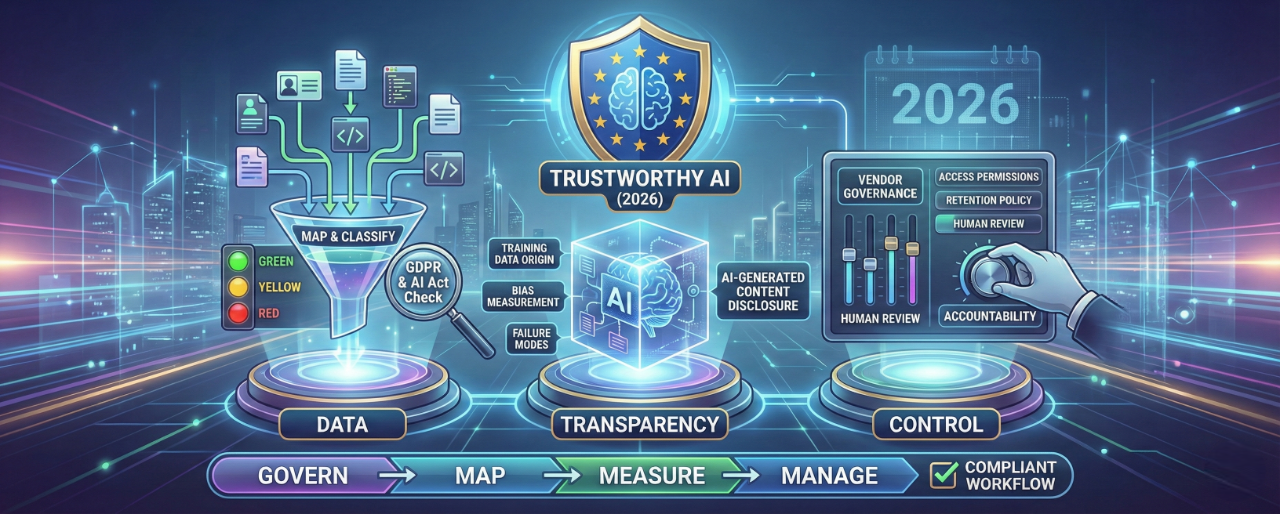

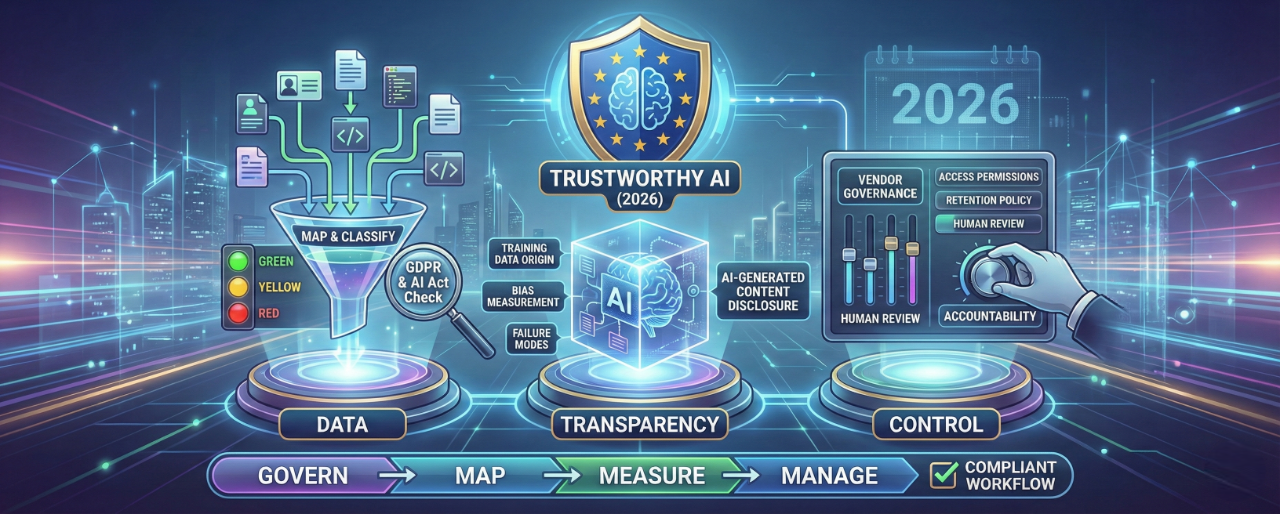

Trustworthy AI in 2026: Data, Transparency, and Control

AI is no longer a “tool for experiments.” It’s becoming infrastructure: used for writing, customer support, recruiting, analytics, product decisions, and content creation.

That also means AI has become a trust topic, because the moment you use an AI model, you’re making implicit choices about:

This article gives you a high-level, business-friendly framework to use AI responsibly and credibly, plus a concrete checklist you can copy into your internal AI policy.

Most AI failures in companies aren’t about model quality. They’re about mismatched expectations:

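In the EU, this trust layer is becoming regulated, especially through the EU AI Act. The Act entered into force on 1 August 2024 and becomes generally applicable on 2 August 2026. Some obligations apply earlier: prohibited practices and AI literacy from 2 February 2025, and obligations for general-purpose AI models from 2 August 2025. Certain high-risk rules (for AI in products covered by existing EU product legislation) follow on 2 August 2027.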
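To make the phased timeline concrete, here is a minimal Python sketch that lists which AI Act milestones have been reached on a given date. The dates follow the Act’s application schedule (Art. 113); the labels are simplified shorthand, not legal text, and the names `AI_ACT_MILESTONES` and `milestones_in_effect` are purely illustrative.

```python
from datetime import date

# Simplified summary of the EU AI Act's phased application schedule (Art. 113).
# Labels are shorthand for planning purposes, not legal text.
AI_ACT_MILESTONES = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibited practices and AI literacy obligations apply"),
    (date(2025, 8, 2), "General-purpose AI model obligations apply"),
    (date(2026, 8, 2), "General applicability of most remaining provisions"),
    (date(2027, 8, 2), "High-risk rules for AI in products under Annex I legislation apply"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return the labels of all milestones whose start date is on or before `on`."""
    return [label for start, label in AI_ACT_MILESTONES if start <= on]


if __name__ == "__main__":
    # Print everything already applicable today.
    for label in milestones_in_effect(date.today()):
        print("-", label)
```

A planning tool built on a table like this keeps the "what applies to us now?" question answerable in one place as deadlines pass.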

1) What happens to my data?

For most AI platforms, your inputs can include:

That triggers classic data protection thinking:

Under GDPR (Art. 32), security is an explicit, risk-based requirement that includes ensuring the ongoing confidentiality, integrity, availability, and resilience of processing systems.

2) Who is accountable?

Even if a model “decides” something, your organization remains accountable for:

That’s why governance matters more than “picking the best model.”

The EU AI Act follows a risk-based approach and creates obligations for different roles, including providers and deployers (users in business contexts).

A) AI literacy is not optional anymore

From 2 February 2025, Article 4 of the AI Act obliges providers and deployers to take measures to ensure a sufficient level of AI literacy among their staff and others using AI on their behalf.

Practically: if your team uses AI at work, you should have training and clear rules.

B) Transparency obligations (deepfakes + AI interactions)

The AI Act includes transparency expectations in several scenarios, including:

Even if you’re not building deepfakes, the “labeling and disclosure” mindset is becoming standard practice for trust.

C) General-purpose AI (GPAI) is increasingly regulated

For general-purpose AI models, the Commission has published guidance and supporting documents around obligations (including training data transparency and copyright-related expectations). If you’re using GPAI platforms, this matters because:

Controller vs processor (and why it matters)

If you use an AI platform to process personal data, you must clarify:

If a vendor is a processor, a Data Processing Agreement (DPA) is required (GDPR Art. 28), and the contract must include specific safeguards (instructions, subprocessors, security, etc.).
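One way to operationalize Art. 28 is to keep a checklist of the mandatory contract terms and compare each vendor’s DPA against it. The sketch below paraphrases the items listed in GDPR Art. 28(3), points (a) to (h), plus the scope details the article also requires; the clause keys and the `missing_dpa_clauses` function are hypothetical names, and ticking a box still depends on someone actually reading the contract.

```python
# Paraphrased summary of the terms GDPR Art. 28(3) requires in a
# controller-processor contract. Keys are hypothetical shorthand.
REQUIRED_DPA_TERMS = {
    "scope": "Subject-matter, duration, nature and purpose, data types, data subject categories",
    "instructions": "(a) Process only on documented instructions from the controller",
    "confidentiality": "(b) Authorised personnel are bound to confidentiality",
    "security": "(c) Security measures required by Art. 32",
    "subprocessors": "(d) Conditions for engaging sub-processors (Art. 28(2) and (4))",
    "data_subject_rights": "(e) Assist with data subject rights requests",
    "controller_assistance": "(f) Assist with Arts. 32 to 36 obligations (security, breaches, DPIAs)",
    "deletion_or_return": "(g) Delete or return personal data at the end of the service",
    "audits": "(h) Provide compliance information and allow audits",
}


def missing_dpa_clauses(covered: set[str]) -> list[str]:
    """Return descriptions of required terms not found in a vendor's DPA.

    `covered` holds the keys a reviewer has ticked off for this vendor.
    """
    return [desc for key, desc in REQUIRED_DPA_TERMS.items() if key not in covered]


if __name__ == "__main__":
    # Hypothetical review result for one AI vendor.
    vendor_dpa = {"scope", "instructions", "confidentiality", "security", "audits"}
    for gap in missing_dpa_clauses(vendor_dpa):
        print("Missing:", gap)
```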

Security requirements are explicit

GDPR expects “appropriate” technical and organizational measures, scaled to risk. That means more than “we use HTTPS”: it includes access controls, logging, incident response, and more.
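As one illustration of the logging point, the sketch below wraps an AI call so that every use leaves a structured audit record (who, when, which tool) without copying the prompt text into the log. `audited_call` and its record fields are hypothetical; `call` stands in for whatever client your approved tool provides, and the digest uses Python’s standard `hashlib`.

```python
import hashlib
import json
import logging
from datetime import datetime, timezone
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("ai_audit")


def audited_call(user: str, tool: str, prompt: str, call: Callable[[str], str]) -> str:
    """Call an AI tool and write one structured audit record per call.

    Only a SHA-256 digest and the length of the prompt are logged, so the
    audit trail does not become another copy of the prompt text.
    The record is written after the call returns.
    """
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "tool": tool,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
    }
    response = call(prompt)
    record["response_chars"] = len(response)
    audit_log.info(json.dumps(record))
    return response


if __name__ == "__main__":
    # A stand-in "model" that just echoes the prompt in upper case.
    print(audited_call("alice", "demo-tool", "summarize Q3 notes", lambda p: p.upper()))
```

Structured records like these make it possible to answer "who used which tool, and when?" during an incident without creating a second store of sensitive content.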

When is a DPO required in Germany?

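Germany’s BDSG sets a widely cited threshold: appoint a DPO if you regularly employ at least 20 people constantly involved in the automated processing of personal data (§ 38 BDSG). The same section also requires a DPO, regardless of headcount, if you carry out processing that is subject to a data protection impact assessment (DPIA) under GDPR Art. 35.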

Separately, GDPR Art. 37 requires a DPO in certain cases (e.g., large-scale monitoring or large-scale special-category data processing).
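The DPO triggers can be expressed as a simple first-pass screening function. This is a simplified sketch: the field names are hypothetical, and each Art. 37 condition is reduced to a yes/no flag that in practice needs a proper assessment. It also encodes the § 38 BDSG rule that a DPO is needed regardless of headcount when processing is subject to a DPIA.

```python
from dataclasses import dataclass

# Headcount threshold from § 38(1) BDSG.
BDSG_HEADCOUNT_THRESHOLD = 20


@dataclass
class OrgProfile:
    # Hypothetical fields for a first-pass screening, not a legal test.
    staff_in_automated_processing: int           # people regularly and constantly involved
    is_public_body: bool = False                 # Art. 37(1)(a) GDPR
    large_scale_monitoring: bool = False         # Art. 37(1)(b) GDPR
    large_scale_special_category: bool = False   # Art. 37(1)(c) GDPR
    processing_requires_dpia: bool = False       # § 38(1) BDSG, DPIA under Art. 35 GDPR


def dpo_triggers(org: OrgProfile) -> list[str]:
    """Return the reasons (if any) why a DPO is likely required."""
    reasons = []
    if org.staff_in_automated_processing >= BDSG_HEADCOUNT_THRESHOLD:
        reasons.append("§ 38 BDSG: 20+ people in automated processing")
    if org.processing_requires_dpia:
        reasons.append("§ 38 BDSG: processing subject to a DPIA (Art. 35 GDPR)")
    if org.is_public_body:
        reasons.append("Art. 37(1)(a) GDPR: public authority or body")
    if org.large_scale_monitoring:
        reasons.append("Art. 37(1)(b) GDPR: large-scale systematic monitoring")
    if org.large_scale_special_category:
        reasons.append("Art. 37(1)(c) GDPR: large-scale special-category data")
    return reasons


if __name__ == "__main__":
    print(dpo_triggers(OrgProfile(staff_in_automated_processing=12)))  # []
    print(dpo_triggers(OrgProfile(staff_in_automated_processing=35)))
```

An empty list only means none of these statutory triggers matched; it is the starting point for the "valuable even when not mandatory" discussion, not the end of it.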

Why a DPO is valuable for AI even when not mandatory

Because AI creates “hidden processing” and “shadow usage” fast. A DPO (internal or external) helps you:

A practical framework for trustworthy AI: Govern → Map → Measure → Manage

If you want one simple mental model, borrow the structure of the NIST AI Risk Management Framework: Govern, Map, Measure, Manage. Here’s how to translate that into a company workflow.

1) GOVERN: Set rules before people “just use AI”

Quick rule that works in practice:

If an employee wouldn’t paste it into a public forum, they shouldn’t paste it into an AI tool unless it’s explicitly approved.
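The quick rule can be turned into a simple pre-check, for example in an internal prompt gateway. This sketch assumes a hypothetical three-level classification (`PUBLIC`, `INTERNAL`, `CONFIDENTIAL`) and a hypothetical allow-list recording the highest level each tool is approved for; the tool names are placeholders to replace with your own.

```python
from enum import IntEnum


class DataClass(IntEnum):
    # Hypothetical three-level scheme; a higher value means more sensitive.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


# Hypothetical allow-list: highest classification each tool is approved for.
APPROVED_TOOLS = {
    "enterprise-assistant": DataClass.INTERNAL,
    "public-chatbot": DataClass.PUBLIC,
}


def may_paste(tool: str, data: DataClass) -> bool:
    """Apply the 'public forum' rule.

    Data you would post publicly is fine in any tool; anything else
    requires a tool explicitly approved for at least that level.
    """
    if data == DataClass.PUBLIC:
        return True
    approved_level = APPROVED_TOOLS.get(tool)
    return approved_level is not None and data <= approved_level


if __name__ == "__main__":
    print(may_paste("public-chatbot", DataClass.INTERNAL))        # False
    print(may_paste("enterprise-assistant", DataClass.INTERNAL))  # True
```

Defaulting unknown tools to "not approved" is the design choice that matters here: shadow tools fail closed instead of silently passing.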

2) MAP: Understand data + risk per use case

For each AI use case, document:

Create a simple 3-level internal classification:

3) MEASURE: Validate reliability, bias, and failure modes

Before using AI outputs in production workflows:

Also document known limitations:
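A lightweight way to start measuring is to run the AI step against a small labeled test set before rollout and report the pass rate both overall and per subgroup, so uneven failure rates (a common bias signal) become visible. In this sketch, `fake_model`, the ticket texts, and the language groups are hypothetical placeholders; in practice you would call your actual tool with cases from your own workflow.

```python
from collections import defaultdict
from typing import Callable

# An evaluation case: (input text, expected output, subgroup label).
EvalCase = tuple[str, str, str]


def evaluate(model: Callable[[str], str], cases: list[EvalCase]) -> dict[str, float]:
    """Return the pass rate overall and per subgroup."""
    passed: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for prompt, expected, group in cases:
        ok = model(prompt).strip().lower() == expected.strip().lower()
        for key in ("overall", group):
            total[key] += 1
            passed[key] += int(ok)
    return {key: passed[key] / total[key] for key in total}


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real AI call: a naive keyword router.
    return "billing" if "refund" in prompt.lower() else "technical"


if __name__ == "__main__":
    cases = [
        ("Refund not received", "billing", "en"),
        ("App crashes on login", "technical", "en"),
        ("Rückerstattung fehlt", "billing", "de"),
        ("App stürzt beim Login ab", "technical", "de"),
    ]
    print(evaluate(fake_model, cases))  # {'overall': 0.75, 'en': 1.0, 'de': 0.5}
```

Here the router looks perfect on English tickets but fails half the German ones; a per-group breakdown like this is what turns "it seems to work" into a documented limitation.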

4) MANAGE: Put controls around tools and vendors

A) Vendor checks (minimum set)

B) Security & access controls (minimum set)

C) Transparency controls (minimum set)

Trustworthy AI isn’t achieved by “choosing the right model.” It’s achieved by building a system around AI:

With the EU AI Act’s phased timeline (AI literacy + prohibited practices already applicable, full applicability in 2026), “we’ll figure it out later” is becoming an expensive strategy.

If you want one tangible takeaway: copy the checklist above into your internal wiki and turn it into:

Then review it quarterly with your DPO/security owner.

If you’re using AI on rich media like video or audio, see CHAMELAION’s GDPR data privacy checklist for AI video translation.

Does the EU AI Act affect companies that only use AI (not build it)?

Yes. The AI Act includes obligations for “deployers” (organizations using AI systems), including AI literacy and transparency obligations in certain contexts.

Do we need AI training for employees?

Under the AI Act, organizations should take measures to ensure a sufficient level of AI literacy for staff using AI on their behalf (in effect from 2 February 2025).

When do we need to label AI-generated content?

The AI Act includes transparency obligations for AI-generated or manipulated content (deepfakes) and other cases. Best practice is to adopt a clear disclosure standard for synthetic content in communications and marketing.

Is it okay to paste customer or employee data into ChatGPT-like tools?

It depends on your classification and vendor setup. If personal data is involved, you need:

When is a Data Protection Officer mandatory in Germany?

Under § 38 BDSG, companies generally must appoint a DPO if they regularly employ at least 20 people constantly involved in automated processing of personal data. GDPR Art. 37 can also require a DPO in certain high-impact cases.

What’s the #1 mistake companies make with AI and data?

Letting “shadow AI” happen: teams adopt tools individually, data leaks into prompts, and nobody can answer basic questions about retention, training use, or access control. That’s a trust failure, not a technical one.
